Implementing the Horn and Schunck optical flow algorithm

Jupyter notebook:

A Jupyter notebook with the code in this article is available in Google Colab. Check it out!Background

The Horn and Schunck variational method for computing optical flow is one of the seminal works in the field. It introduces the idea of using a global smoothness constrain on the estimated optical flow. This constrain helps the numerical solution to find a good flow estimate even in image regions with poor texture.

Let $\mathbf{E}(x, y, t)$ be the image brightness at point $(x, y)$ and time $t$. Considering the constant brightness assumption, where the change in brightness is zero, that is,

$$ \frac{d \mathbf{E}}{d t} = 0 $$

Taking the partial derivatives over $(x, y, t)$, one has:

$$ \frac{\partial \mathbf{E}}{\partial x} \frac{\partial x}{\partial t} + \frac{\partial \mathbf{E}}{\partial y} \frac{\partial y}{\partial t} + \frac{\partial \mathbf{E}}{\partial t} = 0 $$

For convenience, let:

$$ \begin{align*} \mathbf{E}_x &= \frac{\partial \mathbf{E}}{\partial x} \\ \mathbf{E}_y &= \frac{\partial \mathbf{E}}{\partial y} \\ \mathbf{E}_t &= \frac{\partial \mathbf{E}}{\partial t} \end{align*} $$

be the image gradient in the $x$ and $y$ directions, and the partial derivative in time, respectively, and

$$ \begin{align} u &= \frac{\partial x}{\partial t} \\ v &= \frac{\partial y}{\partial t} \end{align} $$

be the $x$ and $y$ components of the optical flow, respectively. The constant brightness equation is then

$$ \mathbf{E}_x u + \mathbf{E}_y v + \mathbf{E}_t = 0 $$

which is the basis for the differential methods for computing optical flow (e.g. Lukas-Kanade).

Minimization

Differential methods for estimating optical flow try to minimize the cost function

$$ \epsilon_b = \mathbf{E}_x u + \mathbf{E}_y v + \mathbf{E}_t $$

that is, to try to find values $(u, v)$ of the optical flow such that the constant brightness constrain is maintained. Notice that there is a single cost funcion and two unknowns $(u, v)$. To solve this, the Horn and Schunck algorithm adds a smoothness constrain based on the average value of the flow in a neighborhood, as

$$ \epsilon_c^2 = (\bar{u} - u )^2 + (\bar{v} - v)^2 $$

Combining both cost functions, one has

$$ \epsilon^2 = \alpha^2 \epsilon_b^2 + \epsilon_c^2 $$

From these equations, a numerical solution is derived. The reader is encouraged to go to the paper for more details. The iterative solution for $(u, v)$ is

$$ \begin{align*} u^{n+1} &= \bar{u}^n - \mathbf{E}_x \frac{\mathbf{E}_x \bar{u}^n + \mathbf{E}_y \bar{v}^n + \mathbf{E}_t}{\alpha^2 + \mathbf{E}_x^2 + \mathbf{E}_y^2} \\ v^{n+1} &= \bar{v}^n - \mathbf{E}_y \frac{\mathbf{E}_x \bar{u}^n + \mathbf{E}_y \bar{v}^n + \mathbf{E}_t}{\alpha^2 + \mathbf{E}_x^2 + \mathbf{E}_y^2} \end{align*} $$

where $(u^{n+1}, v^{n+1})$ is the estimated optical flow at iteration $n + 1$, using the estimated flow at previous iterations and image parameters computed from an image pair.

Implementation

The figure below illustrates the pipeline implementing the algorithm:

@startuml

skinparam linetype ortho

state HS as "HornSchunck" {

state in_gray <<inputPin>>

state ImageProcessor

state ImageNormalize_uint_C1

state NI_1 as "NumericIteration 1"

state NI_2 as "NumericIteration 2"

state NI_3 as "NumericIteration 3"

state NI_N as "NumericIteration N"

in_gray -down-> ImageProcessor

in_gray -down-> ImageNormalize_uint_C1

ImageNormalize_uint_C1 -down-> ImageProcessor: in_gray_old

ImageProcessor -down-> NI_1: in_image_params

ImageProcessor -down-> NI_2

ImageProcessor -down-> NI_3

ImageProcessor -down-> NI_N: in_image_params

NI_1 -> NI_2

NI_2 -> NI_3

NI_3 -> NI_N: ...

NI_N -> NI_1: in_flow, used for next image iteration

NI_N -down-> out_flow <<outputPin>>

ImageNormalize_uint_C1 -down> out_gray <<outputPin>>

}

note top of HS

Parameters

----------

alpha : float. Defaults to 0.05.

Regularization gain.

iterations : int. Defaults to 1.

Number of iterations run to compute the optical flow.

float_precision : int. Defaults to ll.FloatPrecision.FP32.

Floating point precision used accross the algorithm. The outputs out_gray

and out_flow will be of this floating point precision.

end note

@enduml

The HornSchunck is a ContainerNode that instantiates several ComputeNode implementing the algorithm. In particular, the ImageProcessor node computes image parameters from the pair of images in_gray and in_gray_old. Those parameters are transfered to the instances of NumericIteration through in_image_params, organized as follows:

in_image_params.x: X component of the image gradientin_image_params.y: Y component of the image gradientin_image_params.z: temporal derivative betweenin_grayandin_gray_old.in_image_params.w: gain for this pixel computed from image gradient andalphaparameter.

This packaging of the image parameters is convenient as all values are packed together in a singe RGBA pixel. The floating point precision of this, and the estimated optical flow is controlled by the float_precision parameter.

The NumericIteration node takes the image parameters and a prior estimation of the optical flow, in_flow, and computes the next iteration of the flow field. The algorithm requires several iterations for the estimated flow to be of acceptable quality. In the figure above, the last iteration is denoted as NumericIteration_N and it feeds its output back as input to the first one, as well as the output of the HornSchunck node. The number of iterations is controlled by the iterations parameter.

The code block below shows how to run a simple pipeline:

@startuml

skinparam linetype ortho

state RGBA2Gray

state HS as "HornSchunck"

state Flow2RGBA

RGBA2Gray -down-> HS: in_gray

HS -down-> Flow2RGBA: in_flow

@enduml

where RGBA2Gray converts an input RGBA image to gray scale, HornSchunck computes the optical flow, and Flow2RGBA converts the optical flow to color representation.

| |

Evaluation on the Middlebury dataset

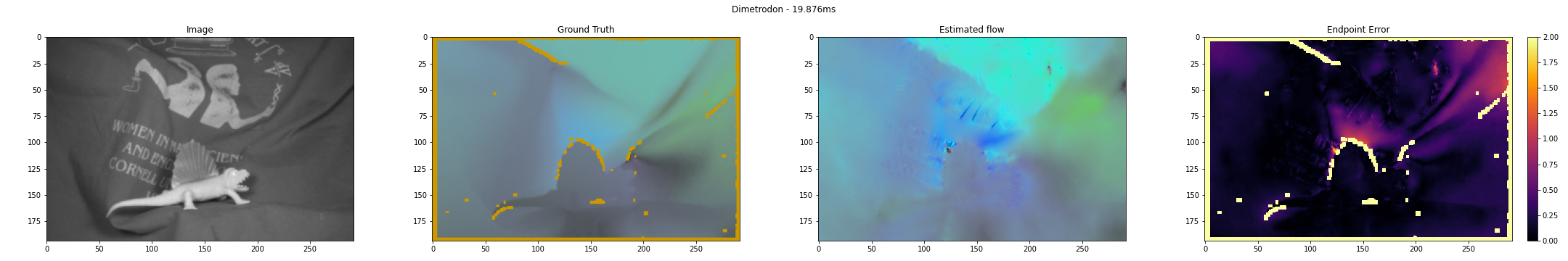

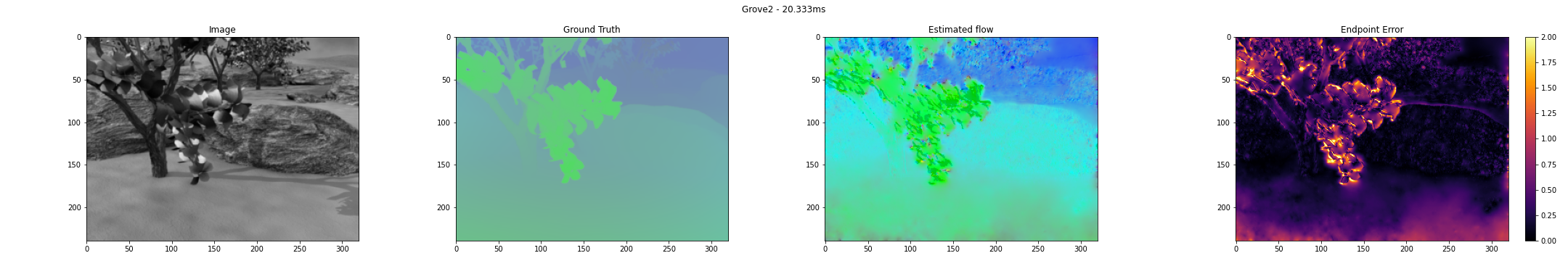

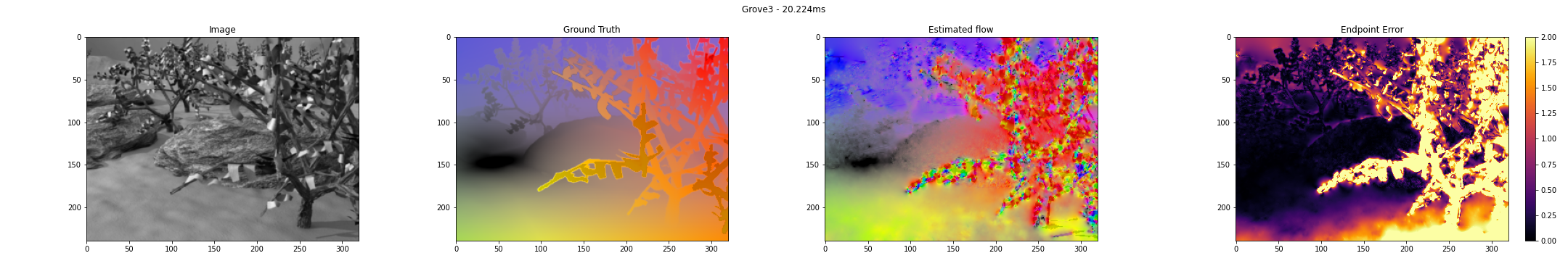

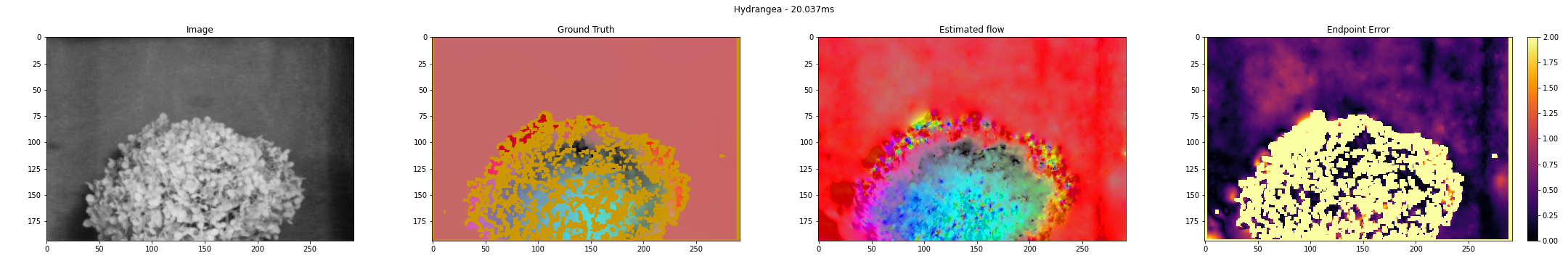

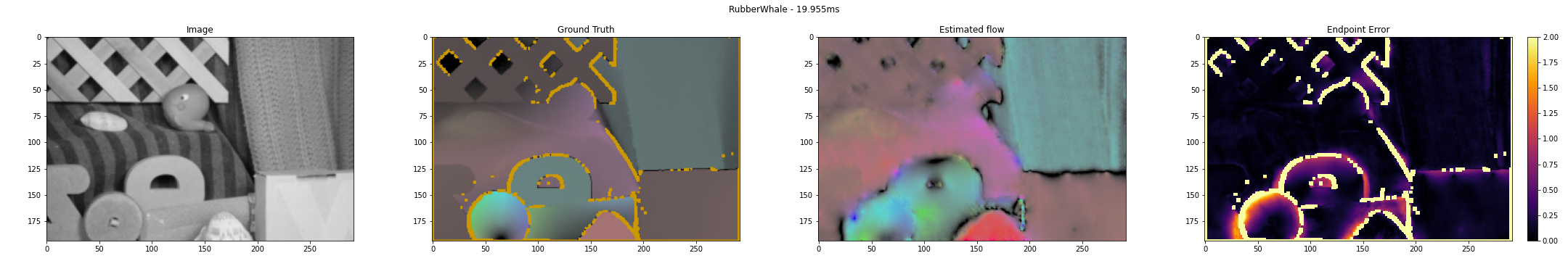

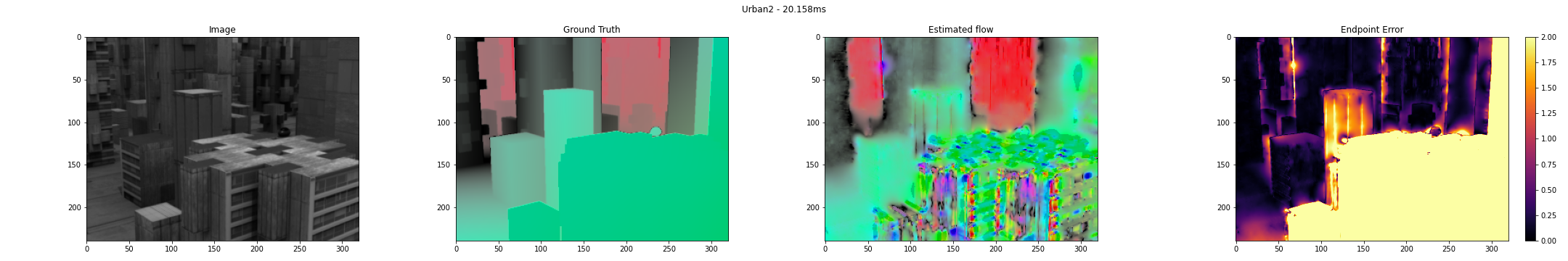

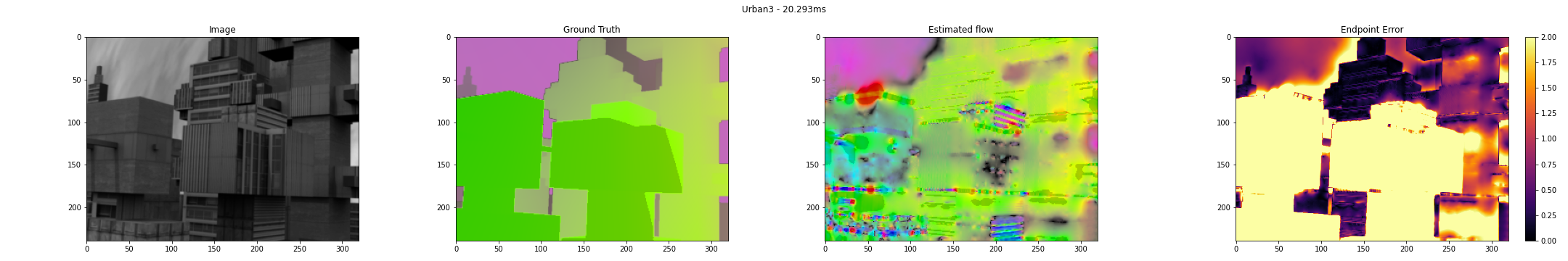

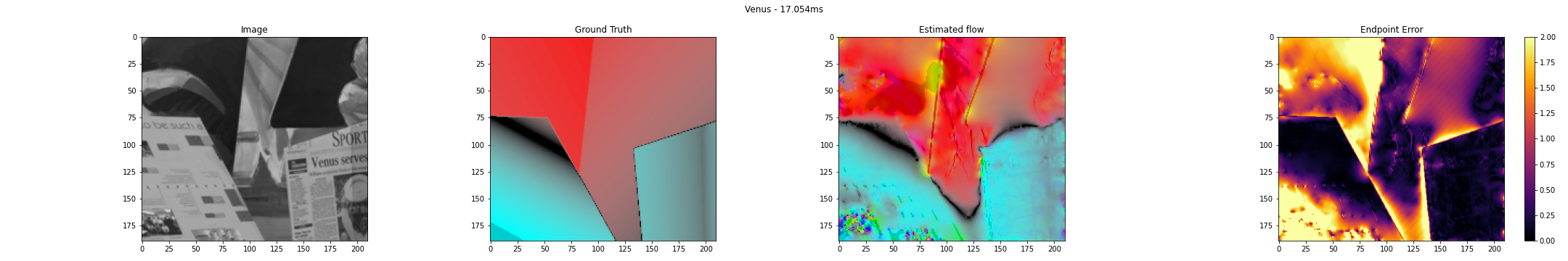

The Middlebury optical flow dataset from Baker et. al. provides several real-life and synthetic image sequences with ground truth optical flow. The figures below shows the estimated optical flow for the test sequences whose ground truth is available.

The Horn ans Schunck algorithm is not well suited for large pixel displacements. Considering this, the input images are scaled to half before entering the compute pipeline. The ground truth flow is scaled accordingly in order to be compared with the estimated flow. The Endpoint Error measures the different in magnitude between the ground truth and the estimation, it is computed as:

$$ EE = \sqrt{(u - u_\text{gt})^2 + (v - v_\text{gt})^2} $$

The algorithm is configured as follows:

alpha: 15.0/255iterations: 2000float_precision: FP32

In general, the estimated optical flow yields acceptable results in image regions with small displacements (e.g. Dimetrodon, Grove2, Hydrangea, and RubberWhale). In image regions with large displacements, the method is not able to compute a good results, as can be visualized in the Urban2 and Urban3 sequences.

The results reported in this post were run on a Razer Blade 2021 Laptop equipped with an Nvidia RTX 3070 GPU. The runtime is reported in the title of each figure, and is in the order of 20 milliseconds for most of the image sequences. Section runtime performance evaluates the performance of the algorithm on different devices, resolutions, and floating point precisions.

Runtime performance

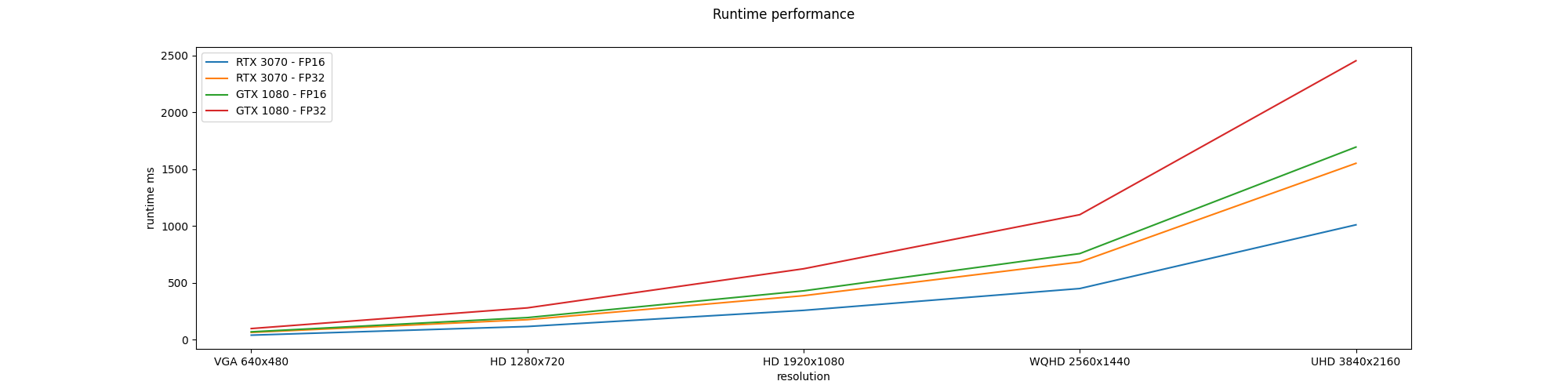

For the runtime analysis of the algorithm, two GPU devices were used:

- A Nvidia GTX 1080 Desktop GPU.

- A Nvidia RTX 3070 Laptop GPU running on a Razer Blade 2021.

The Horn and Schunck pipeline is configured using the same number of iterations used for the Middlebury evalatuon, that is, iterations = 2000. The pipeline is configured for 5 different image resolutions (VGA 640x480, HD 1280x720, HD 1920x1080, WQHD 2560x1440, UHD 3840x2160). For each resolution, the pipeline is run both using FP16 and FP32 floating point precision. The table and figure below show the runtime performance for each configuration.

| Resolution | Float precision | Device | Runtime median (ms) |

|---|---|---|---|

| VGA 640x480 | FP16 | GTX 1080 | 68.8196 |

| RTX 3070 | 39.4354 | ||

| FP32 | GTX 1080 | 97.5005 | |

| RTX 3070 | 63.6458 | ||

| HD 1280x720 | FP16 | GTX 1080 | 193.977 |

| RTX 3070 | 115.626 | ||

| FP32 | GTX 1080 | 279.538 | |

| RTX 3070 | 175.635 | ||

| HD 1920x1080 | FP16 | GTX 1080 | 429.256 |

| RTX 3070 | 257.624 | ||

| FP32 | GTX 1080 | 623.555 | |

| RTX 3070 | 386.718 | ||

| WQHD 2560x1440 | FP16 | GTX 1080 | 757.101 |

| RTX 3070 | 449.536 | ||

| FP32 | GTX 1080 | 1099.35 | |

| RTX 3070 | 682.558 | ||

| UHD 3840x2160 | FP16 | GTX 1080 | 1694.16 |

| RTX 3070 | 1010.16 | ||

| FP32 | GTX 1080 | 2453.45 | |

| RTX 3070 | 1551.34 |

It is not surprising that the RTX 3070 GPU is faster than the GTX 1080, as the former is of a newer generation than the latter.

Discussion

This post presented a GPU implementation of the Horn and Schunck optical flow algorithm. Evaluation in the Middlebury test sequences show the validity of the implementation. A runtime performance analysis was conducted on two GPUs using several image resolutions and floatin point precisions.

Future work includes:

- Implementing a pyramidal scheme, for instance that of Llopis et. al., to improve the accuracy of the algorithm in presence of large displacements.

- Use the smoothness constrain and numerical scheme in the FlowFilter algorithm to improve the accuracy.

References

- Horn, Berthold KP, and Brian G. Schunck. “Determining optical flow.” Artificial intelligence 17.1-3 (1981): 185-203. Google Scholar.

- Baker, S., Scharstein, D., Lewis, J.P., Roth, S., Black, M.J. and Szeliski, R., 2011. A database and evaluation methodology for optical flow. International journal of computer vision, 92(1), pp.1-31. Google Scholar.

- Meinhardt-Llopis, E. and Sánchez, J., 2013. Horn-schunck optical flow with a multi-scale strategy. Image Processing on line. Google Scholar

- Adarve, Juan David, and Robert Mahony. “A filter formulation for computing real time optical flow.” IEEE Robotics and Automation Letters 1.2 (2016): 1192-1199. Google Scholar